Microsoft’s SAMBA Model Redefines Long-Context Learning for AI

:::info Authors:

(1) Liliang Ren, Microsoft and University of Illinois at Urbana-Champaign (liliangren@microsoft.com);

(2) Yang Liu†, Microsoft (yaliu10@microsoft.com);

(3) Yadong Lu†, Microsoft (yadonglu@microsoft.com);

(4) Yelong Shen, Microsoft (yelong.shen@microsoft.com);

(5) Chen Liang, Microsoft (chenliang1@microsoft.com);

(6) Weizhu Chen, Microsoft (wzchen@microsoft.com).

:::

Table of Links

Abstract and 1. Introduction

2. Methodology

3. Experiments and Results

3.1 Language Modeling on Textbook Quality Data

3.2 Exploration on Attention and Linear Recurrence

3.3 Efficient Length Extrapolation

3.4 Long-Context Understanding

4. Analysis

5. Conclusion, Acknowledgement, and References

A. Implementation Details

B. Additional Experiment Results

C. Details of Entropy Measurement

D. Limitations

\

Abstract

Efficiently modeling sequences with infinite context length has been a long-standing problem. Past works suffer from either quadratic computation complexity or limited extrapolation ability in length generalization. In this work, we present SAMBA, a simple hybrid architecture that layer-wise combines Mamba, a selective State Space Model (SSM), with Sliding Window Attention (SWA). SAMBA selectively compresses a given sequence into recurrent hidden states while still maintaining the ability to precisely recall memories with the attention mechanism. We scale SAMBA up to 3.8B parameters with 3.2T training tokens and show that SAMBA substantially outperforms the state-of-the-art models based on pure attention or SSMs on a wide range of benchmarks. When trained on 4K length sequences, SAMBA can be efficiently extrapolated to 256K context length with perfect memory recall and shows improved token predictions up to 1M context length. As a linear-time sequence model, SAMBA enjoys a 3.73× higher throughput compared to Transformers with grouped-query attention when processing user prompts of 128K length, and a 3.64× speedup when generating 64K tokens with unlimited streaming. A sample implementation of SAMBA is publicly available at https://github.com/microsoft/Samba.

1 Introduction

Attention-based models [VSP+17, BCB14] have dominated the neural architectures of Large Language Models (LLMs) [RWC+19, BMR+20, Ope23, BCE+23] due to their ability to capture complex long-term dependencies and their efficient parallelization for large-scale training [DFE+22]. Recently, State Space Models (SSMs) [GGR21, SWL23, GGGR22, GD23] have emerged as a promising alternative, offering linear computation complexity and the potential for better extrapolation to sequences longer than those seen during training. Specifically, Mamba [GD23], a variant of SSMs equipped with selective state spaces, has demonstrated notable promise through strong empirical performance and an efficient hardware-aware implementation. Recent work also shows that Transformers have poorer modeling capacities than input-dependent SSMs in state tracking problems [MPS24]. However, SSMs struggle with memory recall due to their Markovian nature [AET+23], and experimental results on information retrieval-related tasks [FDS+23, WDL24, AEZ+24] have further shown that SSMs are not as competitive as their attention-based counterparts.

\ Previous works [ZLJ+22, FDS+23, MZK+23, RLW+23] have explored different approaches to hybridize SSMs and the attention mechanism, but none of them achieve unlimited-length extrapolation with linear-time complexity. The existing length generalization techniques [HWX+23, XTC+23, JHY+24] developed for the attention mechanism suffer from quadratic computation complexity or limited context extrapolation ability. In this paper, we introduce SAMBA, a simple neural architecture that harmonizes the strengths of both the SSM and the attention-based models, while achieving unlimited sequence length extrapolation with linear time complexity. SAMBA combines SSMs with attention by interleaving Mamba [GD23], SwiGLU [Sha20], and Sliding Window Attention (SWA) [BPC20] layers. Mamba layers capture the time-dependent semantics and provide a backbone for efficient decoding, while SWA fills the gap by modeling complex, non-Markovian dependencies.

\ ![Figure 1: SAMBA shows improved prediction up to 1M tokens in the Proof-Pile test set while achieving a 3.64× faster decoding throughput than the Llama-3 architecture [Met24] (a state-of-the-art Transformer [VSP+17] with Grouped-Query Attention [ALTdJ+23]) on 64K generation length. We also include an SE-Llama-3 1.6B baseline which applies the SelfExtend [JHY+24] approach for zero-shot length extrapolation. Throughput measured on a single A100 80GB GPU. All models are trained on the Phi-2 [LBE+23] dataset with 4K sequence length.](https://cdn.hackernoon.com/images/null-za03493.png)

\ We scale SAMBA with 421M, 1.3B, 1.7B and up to 3.8B parameters. In particular, the largest 3.8B base model pre-trained with 3.2T tokens achieves a score of 71.2 on MMLU [HBB+21], 54.9 on HumanEval [CTJ+21], and 69.6 on GSM8K [CKB+21], substantially outperforming strong open source language models up to 8B parameters, as detailed in Table 1. Despite being pre-trained at a 4K sequence length, SAMBA can be extrapolated zero-shot to 1M length with improved perplexity on Proof-Pile [ZAP22] while still maintaining linear decoding time complexity with unlimited token streaming, as shown in Figure 1. We show that when instruction-tuned at a 4K context length for only 500 steps, SAMBA can be extrapolated to a 256K context length with perfect memory recall in Passkey Retrieval [MJ23]. In contrast, the fine-tuned SWA-based model cannot recall memories beyond 4K length. We further demonstrate that the instruction-tuned SAMBA 3.8B model achieves significantly better performance than the SWA-based models on downstream long-context summarization tasks, while still keeping its strong performance on the short-context benchmarks. Finally, we conduct comprehensive analyses and ablation studies, at scales up to 1.7 billion parameters, to validate the architectural design of SAMBA. These investigations not only justify our architectural designs but also elucidate the potential mechanisms underpinning the effectiveness of this simple hybrid approach.

2 Methodology

We explore different hybridization strategies built from layers of Mamba, Sliding Window Attention (SWA), and Multi-Layer Perceptron (MLP) [Sha20, DFAG16]. We conceptualize the functionality of Mamba as the capture of recurrent sequence structures, SWA as the precise retrieval of memory, and MLP as the recall of factual knowledge. We also explore other linear recurrent layers, including Multi-Scale Retention [SDH+23] and GLA [YWS+23], as potential substitutions for Mamba in Section 3.2. Our goal of hybridization is to harmonize these functionally distinct blocks and find an efficient architecture for language modeling with unlimited-length extrapolation ability.

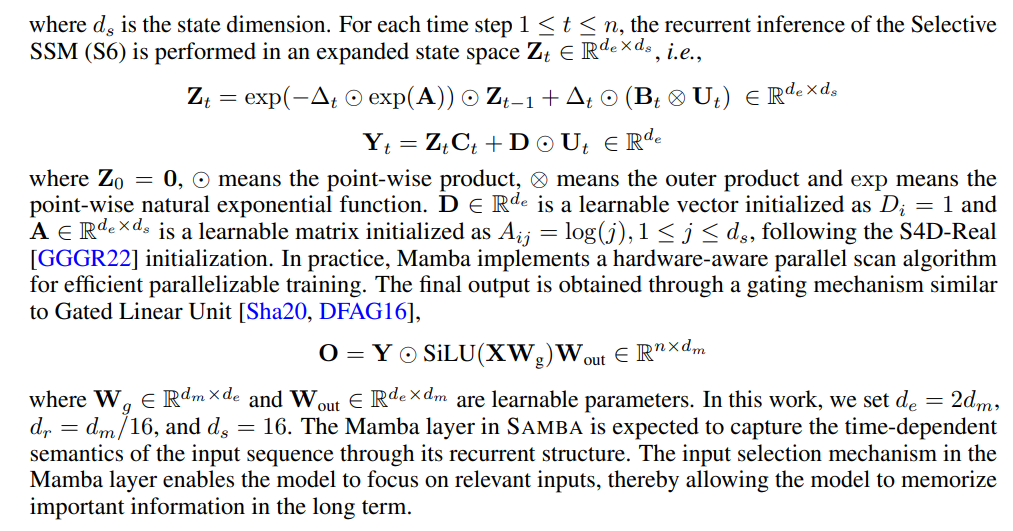

2.1 Architecture

As illustrated in Figure 2, we explore three kinds of layer-wise hybridization strategies on the 1.7B scale: Samba, Mamba-SWA-MLP, and Mamba-MLP. We also explore other hybridization approaches with full self-attention on smaller scales in Section 4. The number of layers N is set to 48 for Samba, Mamba-MLP, and Mamba, while Mamba-SWA-MLP has 54 layers, so each model has approximately 1.7B parameters. We modify only the layer-level arrangement for each of the models and keep every other configuration the same to allow apples-to-apples comparisons. More details on the configuration of each layer are explained in the following subsections.

\ ![Figure 2: From left to right: Samba, Mamba-SWA-MLP, Mamba-MLP, and Mamba. The illustrations depict the layer-wise integration of Mamba with various configurations of Multi-Layer Perceptrons (MLPs) and Sliding Window Attention (SWA). We assume the total number of intermediate layers to be N, and omit the embedding layers and output projections for simplicity. Pre-Norm [XYH+20, ZS19] and skip connections [HZRS16] are applied for each of the intermediate layers.](https://cdn.hackernoon.com/images/null-wi034ed.png)
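The layer arrangements compared here can be written down as repeating patterns. The sketch below is only an illustration: the order of layers inside each repeating unit is an assumption read off the model names and the Figure 2 caption, and the names `BLOCK_PATTERNS` and `build_layer_stack` are hypothetical, not taken from the released code.

```python
from typing import Dict, List

# Assumed repeating units (not confirmed by the released implementation).
# "mamba", "swa" and "mlp" are placeholder labels for the three layer types.
BLOCK_PATTERNS: Dict[str, List[str]] = {
    "Samba": ["mamba", "mlp", "swa", "mlp"],
    "Mamba-SWA-MLP": ["mamba", "swa", "mlp"],
    "Mamba-MLP": ["mamba", "mlp"],
    "Mamba": ["mamba"],
}


def build_layer_stack(name: str, num_layers: int) -> List[str]:
    """Repeat the unit for `name` until `num_layers` intermediate layers exist."""
    pattern = BLOCK_PATTERNS[name]
    if num_layers % len(pattern) != 0:
        raise ValueError(
            f"{num_layers} layers cannot be tiled by a unit of {len(pattern)}"
        )
    return pattern * (num_layers // len(pattern))


# Layer counts N as stated in the text for the 1.7B-scale models.
samba = build_layer_stack("Samba", 48)
mamba_swa_mlp = build_layer_stack("Mamba-SWA-MLP", 54)
mamba_mlp = build_layer_stack("Mamba-MLP", 48)
mamba = build_layer_stack("Mamba", 48)
```

Under these assumed patterns, the layer counts given in the text divide evenly into whole repeating units for every variant (48 by 4, 2 and 1; 54 by 3).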

\ 2.1.1 Mamba Layer

\

\

\ 2.1.2 Sliding Window Attention (SWA) Layer

\ The Sliding Window Attention [BPC20] layer is designed to address the limitations of the Mamba layer in capturing non-Markovian dependencies in sequences. Our SWA layer uses a window of size w = 2048 that slides over the input sequence, ensuring that the computational complexity remains linear with respect to the sequence length. The RoPE [SLP+21] relative positions are applied within the sliding window. By directly accessing the contents of the context window through attention, the SWA layer can retrieve high-definition signals from the middle to short-term history that cannot be clearly captured by the recurrent states of Mamba. We use FlashAttention 2 [Dao23] for the efficient implementation of self-attention throughout this work. We also choose the 2048 sliding window size for efficiency reasons: FlashAttention 2 has the same training speed as Mamba’s selective parallel scan at a sequence length of 2048, based on the measurements in [GD23].
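To make the linear-complexity claim concrete, the following minimal sketch builds a causal sliding-window mask and counts how many query-key pairs are evaluated. It assumes the window of size w includes the current token, which the text does not specify, and the function names are hypothetical. It illustrates the masking pattern only, not the FlashAttention 2 kernel.

```python
from typing import List


def sliding_window_mask(seq_len: int, window: int) -> List[List[bool]]:
    """mask[i][j] is True when query position i may attend to key position j.

    Assumption: the window covers the current token and the window - 1
    tokens before it, and attention is causal (no future keys).
    """
    return [
        [(j <= i) and (i - j < window) for j in range(seq_len)]
        for i in range(seq_len)
    ]


def attended_pairs(seq_len: int, window: int) -> int:
    """Number of (query, key) pairs scored under the sliding-window mask."""
    return sum(min(i + 1, window) for i in range(seq_len))


def full_causal_pairs(seq_len: int) -> int:
    """Number of (query, key) pairs scored under full causal attention."""
    return seq_len * (seq_len + 1) // 2


if __name__ == "__main__":
    w = 2048
    for n in (4096, 8192, 16384):
        print(n, attended_pairs(n, w), full_causal_pairs(n))
```

Once the sequence is longer than the window, every additional token adds exactly `window` pairs, so the total cost grows linearly with sequence length, whereas the full causal count grows quadratically.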

\ 2.1.3 Multi-Layer Perceptron (MLP) Layer

\ The MLP layers in SAMBA serve as the architecture’s primary mechanism for nonlinear transformation and recall of factual knowledge [DDH+22]. We use SwiGLU [Sha20] for all the models trained in this paper and denote its intermediate hidden size as d_p. As shown in Figure 2, Samba applies separate MLPs for different types of information captured by Mamba and the SWA layers.
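For readers unfamiliar with SwiGLU, here is a minimal pure-Python sketch of a bias-free SwiGLU feed-forward layer in the formulation of [Sha20]: the input is projected twice to the intermediate hidden size, one projection is passed through Swish and used to gate the other, and the result is projected back. The weight names (`w_gate`, `w_up`, `w_down`) and the helper functions are hypothetical, and nothing here reflects the paper's actual dimensions or implementation.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]  # row-major, shape (in_dim, out_dim)


def matvec(x: Vector, w: Matrix) -> Vector:
    """Compute the row-vector product x @ w."""
    return [sum(x[i] * w[i][j] for i in range(len(x))) for j in range(len(w[0]))]


def swish(z: float) -> float:
    """Swish with beta = 1 (also called SiLU): z * sigmoid(z)."""
    return z / (1.0 + math.exp(-z))


def swiglu_mlp(x: Vector, w_gate: Matrix, w_up: Matrix, w_down: Matrix) -> Vector:
    """Bias-free SwiGLU MLP: (Swish(x @ w_gate) * (x @ w_up)) @ w_down.

    w_gate and w_up map the model width to the intermediate hidden size;
    w_down maps the intermediate hidden size back to the model width.
    """
    gate = [swish(g) for g in matvec(x, w_gate)]
    up = matvec(x, w_up)
    hidden = [g * u for g, u in zip(gate, up)]  # element-wise gating
    return matvec(hidden, w_down)


if __name__ == "__main__":
    # Toy sizes: model width 2, intermediate hidden size 3.
    x = [1.0, -0.5]
    w_gate = [[0.1, 0.2, 0.3], [0.4, 0.5, 0.6]]
    w_up = [[1.0, 0.0, -1.0], [0.5, 0.5, 0.5]]
    w_down = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    print(swiglu_mlp(x, w_gate, w_up, w_down))
```

The gating means the layer uses three weight matrices instead of the two in a plain MLP, which is why the intermediate hidden size is a separate configuration choice.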

\

:::info This paper is available on arXiv under the CC BY 4.0 license.

:::

∗Work partially done during internship at Microsoft.

†Equal second-author contribution.
